Datacenters, including the “cloud”, store humanity’s digital big data on hard disks and magnetic tapes whose limited lifespan requires expensive copies to be made every five to seven years; they devour resources such as land, electricity, water and scarce materials. In comparison, storage at a molecular scale in a polymer such as DNA could have a density ten million times higher, last ten thousand times longer without the need for periodic copying, and consume very little energy. Indeed, DNA is stable at ordinary temperatures for several millennia and can be easily duplicated or deliberately destroyed. The required technologies already exist. However, in order to become viable for information archiving, they must be developed further. This could be achieved within five to twenty years, and would be facilitated by synergies between the public and private sectors.

The storage and archiving of digital big data by the current approach based on datacenters will not be sustainable beyond 2040. There is therefore an urgent need to focus sustained R&D efforts on the advent of alternative approaches, none of which are currently mature enough.

The Global DataSphere (GDS) was estimated in 2018 at 33 thousand billion billion (33 × 10²¹) characters (bytes), which is of the same order as the estimated number of grains of sand on Earth. This data comes not only from research and industry, but also from our personal and professional connections, books, videos, photos and medical information. It will be further increased in the near future by autonomous cars, sensors, remote monitoring, virtual reality, and remote diagnosis and surgery. The GDS is growing by a factor of about a thousand every twenty years.
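The growth figure quoted above can be turned into more familiar rates. The following is a minimal sketch that assumes smooth exponential growth between the two figures given in the text (33 × 10²¹ bytes in 2018, a thousand-fold increase every twenty years). The function names are illustrative, and the extrapolation is only as good as that assumption.

```python
import math

# Figures taken from the text.
GDS_2018_BYTES = 33e21        # estimated size of the GDS in 2018
GROWTH_FACTOR = 1000.0        # about a thousand-fold increase...
GROWTH_PERIOD_YEARS = 20.0    # ...every twenty years


def annual_growth_factor() -> float:
    """Equivalent year-on-year multiplier, assuming steady exponential growth."""
    return GROWTH_FACTOR ** (1.0 / GROWTH_PERIOD_YEARS)


def doubling_time_years() -> float:
    """Number of years needed for the GDS to double at that rate."""
    return GROWTH_PERIOD_YEARS * math.log(2) / math.log(GROWTH_FACTOR)


def projected_gds_bytes(year: int) -> float:
    """Extrapolated GDS size for a given year (an assumption, not a forecast)."""
    elapsed = year - 2018
    return GDS_2018_BYTES * GROWTH_FACTOR ** (elapsed / GROWTH_PERIOD_YEARS)


if __name__ == "__main__":
    print(f"annual growth factor: {annual_growth_factor():.3f}")
    print(f"doubling time: {doubling_time_years():.2f} years")
    print(f"projected GDS in 2040: {projected_gds_bytes(2040):.2e} bytes")
```

Under this assumption the GDS grows by roughly 41% per year and doubles about every two years.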

Most of this data is stored in several million datacenters (including corporate and cloud datacenters), which operate within transmission networks. These centers and networks already consume about 2% of the electricity in the developed world. Worldwide, their construction and operating costs are on the order of one trillion euros. They cover one millionth of the world’s land surface and, at the current rate, would cover one thousandth of it by 2040.

The storage technologies used by these centers rapidly become obsolete in terms of format, read/write devices and the storage medium itself, which must be copied every five to seven years to ensure data integrity. They also pose increasing problems in the supply of scarce resources such as electronic-grade silicon.

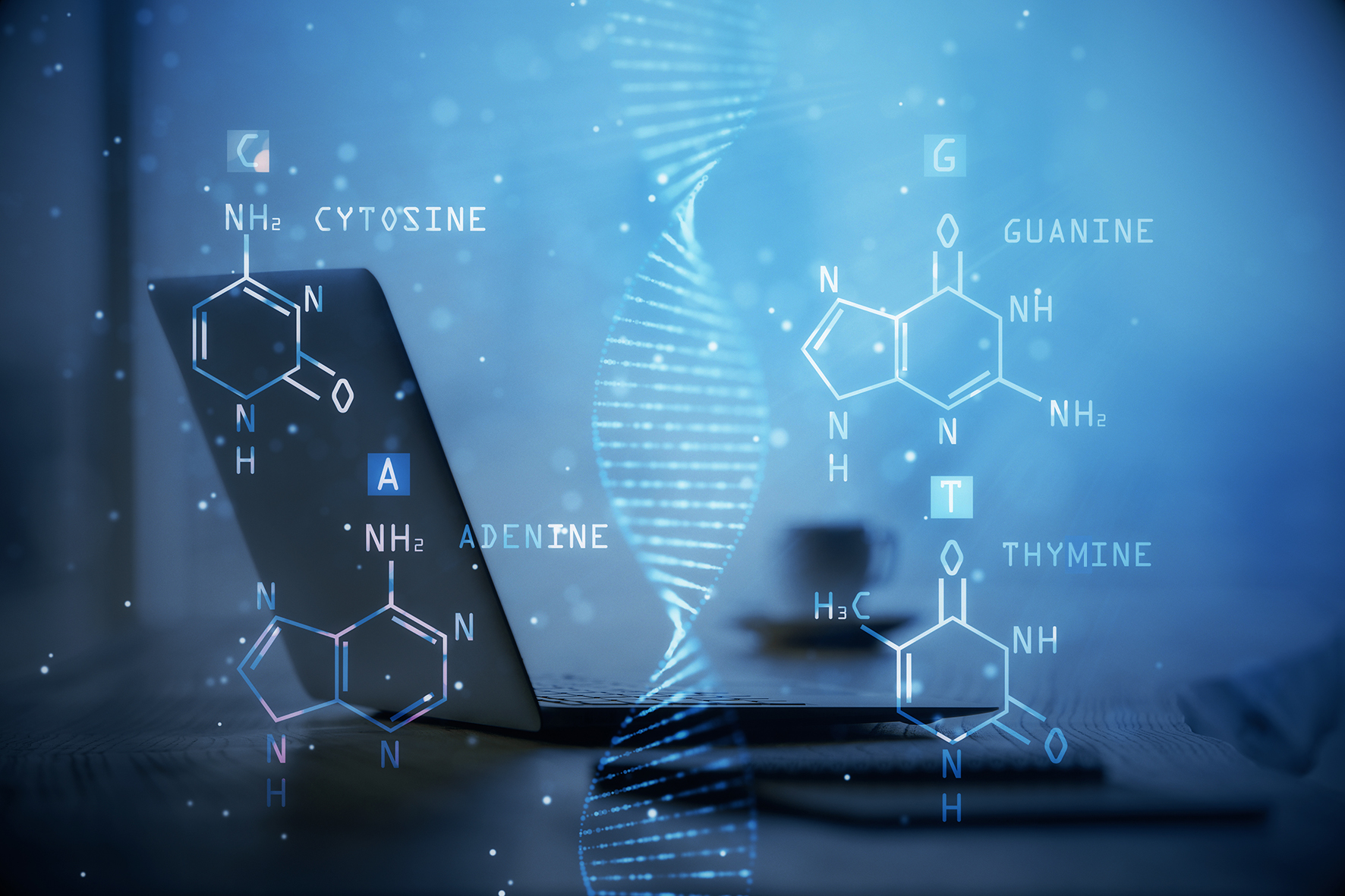

An attractive alternative is offered by molecular carriers of information, such as DNA (used as a chemical rather than a biological agent) or certain very promising non-DNA heteropolymers. In principle, DNA should allow information densities ten million times higher than those of traditional memories: the entire current GDS would fit in a van. DNA is stable at ordinary temperatures for several millennia without energy consumption. It can be easily multiplied or destroyed at will. Some calculations can be physically implemented with DNA fragments. Finally, DNA as a storage medium will not become obsolete because it constitutes our hereditary material.

To archive and retrieve data in DNA, five steps must be followed: encoding the binary data file in the four-letter DNA alphabet, writing the DNA, storing it, reading it, and finally decoding the information it contains. A prototype performing these steps has been in operation since March 2019 at Microsoft Corp. in the United States. The performance of such prototypes must improve by several orders of magnitude to become economically viable: about a thousand-fold for the cost and speed of reading, and 100 million-fold for the speed of writing. These factors may seem staggering, but that view overlooks the pace of progress in DNA technologies, which improve by close to a factor of one thousand every five years, much faster than in the electronic and computer fields. If that pace were sustained, a thousand-fold gain would take about five years and a 100 million-fold gain about thirteen.
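The first and last of these steps, encoding and decoding, can be illustrated in a few lines. The following is a minimal sketch that assumes the simplest possible mapping: two bits per nucleotide (00 to A, 01 to C, 10 to G, 11 to T). The mapping and the function names are illustrative assumptions, not the scheme used by the prototype mentioned above. Practical schemes would typically also add error correction and addressing, and avoid sequences that are difficult to write or read.

```python
# Assumed mapping for illustration: index in this string = 2-bit value.
NUCLEOTIDES = "ACGT"  # 00 -> A, 01 -> C, 10 -> G, 11 -> T


def encode(data: bytes) -> str:
    """Encode bytes as a DNA string, four nucleotides per byte."""
    bases = []
    for byte in data:
        # Walk through the byte two bits at a time, most significant pair first.
        for shift in (6, 4, 2, 0):
            bases.append(NUCLEOTIDES[(byte >> shift) & 0b11])
    return "".join(bases)


def decode(strand: str) -> bytes:
    """Decode a DNA string produced by encode() back into bytes."""
    if len(strand) % 4 != 0:
        raise ValueError("strand length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            # str.index raises ValueError for any letter outside A, C, G, T.
            byte = (byte << 2) | NUCLEOTIDES.index(base)
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    strand = encode(b"Hi")
    print(strand)          # CAGACGGC
    print(decode(strand))  # b'Hi'
```

With this naive mapping each nucleotide carries two bits, so a file of n bytes becomes a sequence of 4n letters before any redundancy is added.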